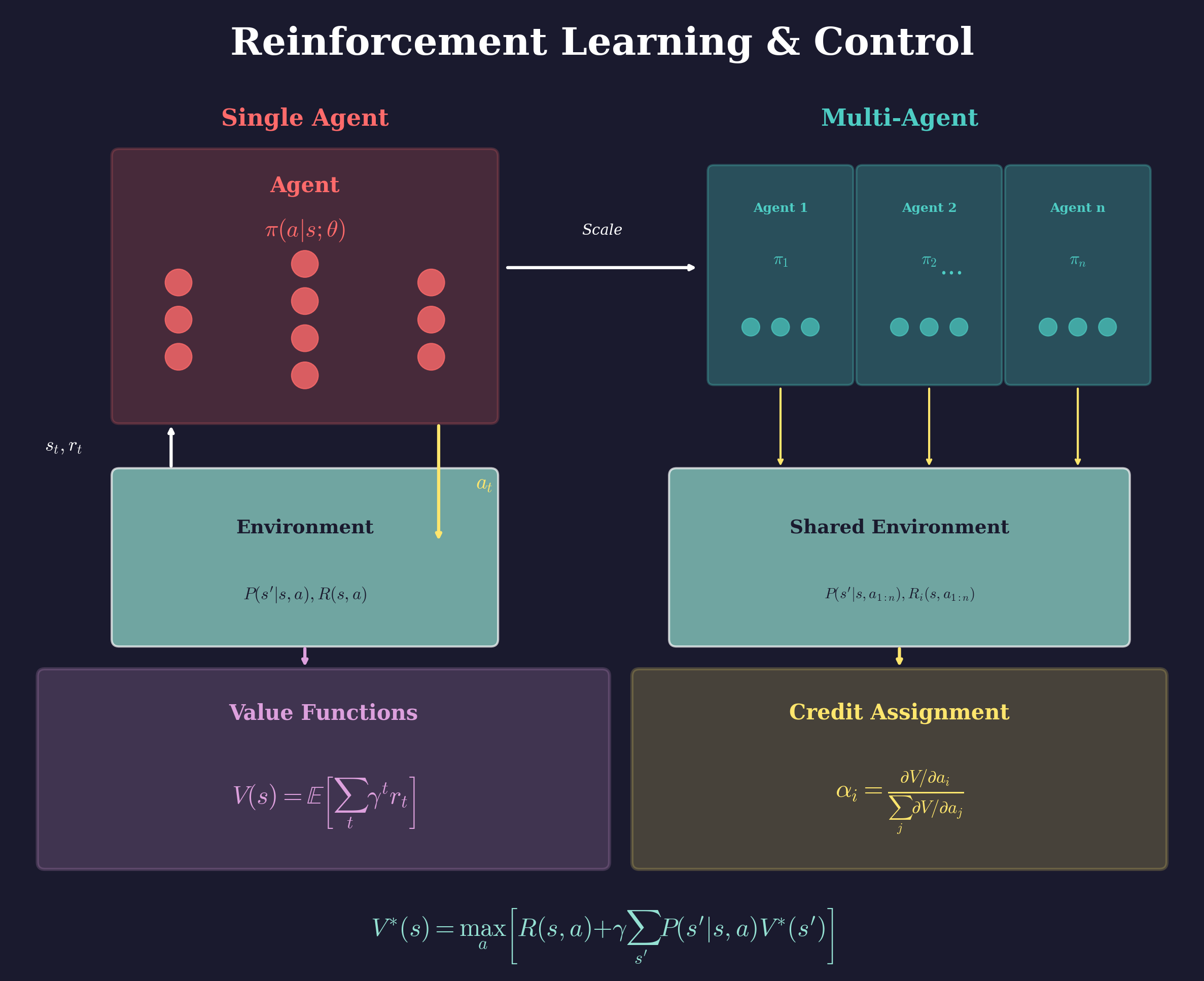

Reinforcement learning enables autonomous decision-making in complex, uncertain environments. My research spans theoretical foundations, algorithmic development, and applications to scientific and societal systems.

Image-Based Control: Cooperative deep Q-learning frameworks for environments providing high-dimensional image feedback, enabling agents to learn control policies directly from visual observations without hand-crafted state representations.
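As a rough illustration of learning from raw pixels, the sketch below applies a single Q-learning temporal-difference update to an image observation. It is a deliberately simplified stand-in, not the cooperative deep Q-learning framework described above: a linear function of the flattened pixels replaces the deep network, only one agent is modeled, and every name (`q_values`, `td_update`, the 8x8 frame size, the step size and discount) is an assumption chosen for the example.

```python
import numpy as np

def q_values(weights, image):
    """Return one Q-value per action for a single image observation."""
    # Flatten the image and scale pixel intensities to [0, 1].
    features = image.reshape(-1).astype(np.float64) / 255.0
    return weights @ features

def td_update(weights, image, action, reward, next_image, done, gamma=0.99, lr=0.01):
    """Apply one Q-learning step to `weights` in place and return the TD error."""
    features = image.reshape(-1).astype(np.float64) / 255.0
    # Bootstrapped target: reward plus discounted best next-state value.
    if done:
        target = reward
    else:
        target = reward + gamma * np.max(q_values(weights, next_image))
    td_error = target - q_values(weights, image)[action]
    # Gradient step for a linear Q-function: only the taken action's row changes.
    weights[action] += lr * td_error * features
    return td_error

# Hypothetical toy data: random 8x8 grayscale frames and three discrete actions.
rng = np.random.default_rng(0)
n_actions, height, width = 3, 8, 8
weights = np.zeros((n_actions, height * width))
frame = rng.integers(0, 256, size=(height, width), dtype=np.uint8)
next_frame = rng.integers(0, 256, size=(height, width), dtype=np.uint8)

err = td_update(weights, frame, action=1, reward=1.0, next_image=next_frame, done=False)
```

Swapping the linear map for a convolutional network, and adding a replay buffer and a target network, would move this toward a standard deep Q-learning setup; the update rule itself keeps the same form.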

Optimal Adaptive Control: Theoretical foundations for optimal control of systems with partial model uncertainty and state delays, bridging classical control theory with modern reinforcement learning. This work provides stability guarantees for learning-based controllers.
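For readers new to the classical side of this bridge, the following sketch computes an optimal state-feedback gain for a fully known, delay-free, discrete-time linear system by iterating the Riccati recursion, then checks that the closed loop is stable. This is textbook LQR used only as a reference point; it does not handle the partial model uncertainty, continuous-time dynamics, or state delays addressed in the work above. The function name `lqr_gain` and the double-integrator matrices are assumptions made for the example.

```python
import numpy as np

def lqr_gain(A, B, Q, R, iterations=500):
    """Iterate the discrete-time Riccati recursion and return (K, P) with u = -K x."""
    P = Q.copy()
    for _ in range(iterations):
        # Gain that minimizes the one-step cost plus the current cost-to-go x' P x.
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        # Riccati update: P = Q + A' P A - A' P B (R + B' P B)^{-1} B' P A.
        P = Q + A.T @ P @ (A - B @ K)
    K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
    return K, P

# Hypothetical plant: a double integrator sampled every 0.1 time units.
A = np.array([[1.0, 0.1],
              [0.0, 1.0]])
B = np.array([[0.005],
              [0.1]])
Q = np.eye(2)          # state cost weights
R = np.array([[1.0]])  # control cost weight

K, P = lqr_gain(A, B, Q, R)

# The closed loop x_{k+1} = (A - B K) x_k is stable when all eigenvalues lie inside the unit circle.
spectral_radius = np.max(np.abs(np.linalg.eigvals(A - B @ K)))
```

An adaptive, learning-based controller has to reach a comparable gain without full knowledge of `A` and `B`, which is why stability guarantees during learning are the central difficulty.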

Agent-Based Modeling: Applying RL and agent-based simulation to understand how climate change affects equity across communities, and developing lightweight decision support frameworks for climate resilience planning in cities such as Chicago.

Educational Resources: Contributing to the research community through accessible introductions to reinforcement learning, including a book chapter in “Methods and Applications of Autonomous Experimentation” that bridges RL theory with scientific applications.

Together, these methods aim to let autonomous systems learn effective behaviors in complex environments while retaining the stability and performance guarantees that real-world deployment requires.

Publications

- Introduction to Reinforcement Learning - Book Chapter, Chapman and Hall/CRC 2023

- Optimal Adaptive Control of Partially Uncertain Linear Continuous-Time Systems with State Delay - Book Chapter, Springer 2021

- Learning to Control Using Image Feedback - arXiv 2021

- Online Optimal Adaptive Control of a Class of Uncertain Nonlinear Discrete-Time Systems - IJCNN 2020

- Agent-Based Modeling of Communities for Understanding Equity Effects of Climate Change - AGU Fall Meeting 2024

- A Lightweight Decision Support Framework for Community Climate Resilience in Chicago - AGU Fall Meeting 2024